Outline

Introduction

Decentralized AI training

Data as an asset

Decentralized broker

Decentralized education

Learning from experience

Introduction

AI has made significant progress, notably with AlphaGo and ChatGPT. The underlying techniques are deep learning and reinforcement learning. Researchers and developers are the driving force. Notable among them: 1) Yoshua Bengio (U. of Montreal, Mila), Geoffrey Hinton (U. of Toronto, Vector Institute) and Yann LeCun (New York U., Meta) won the 2018 Turing Award "for conceptual and engineering breakthroughs that have made deep neural networks a critical component of computing", https://awards.acm.org/about/2018-turing, and 2) Andrew Barto (U. of Massachusetts Amherst) and Richard Sutton (U. of Alberta, Amii) won the 2024 Turing Award "for developing the conceptual and algorithmic foundations of reinforcement learning", https://awards.acm.org/about/2024-turing.

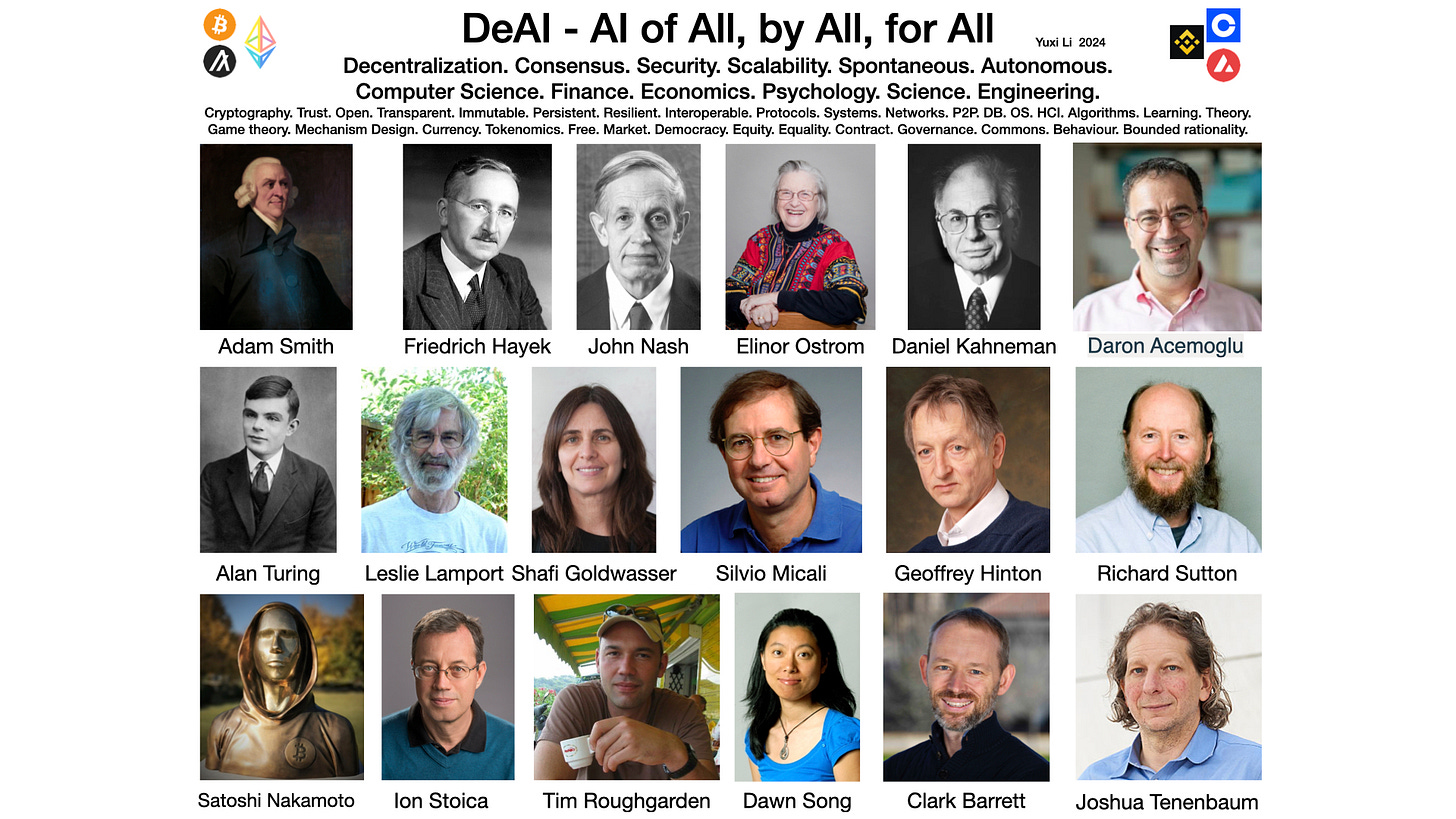

However, AI by itself may not ensure that the prosperity resulting from technological progress is shared equally across society, and so may not achieve the goal: AI for all, by all, of all. Inspired by the insights of Daron Acemoglu, Simon Johnson and James Robinson, laureates of the 2024 Nobel Prize in economics, on democratic and inclusive institutions, we see decentralized AI, i.e., the integration of AI and blockchain, as a promising solution. Moreover, blockchain needs applications, especially killer apps, and decentralized AI is a promising category of such applications.

We identified key techniques enabling decentralized AI: deep learning for function approximation, reinforcement learning for sequential decision making, blockchain for a trustless yet secure distributed ledger, incentive mechanisms for collaboration among selfish agents, human behavior for handling biases in human decision making, simulation for integrating analytic and empirical methodologies, interpretability for making systems understandable, and software engineering for system implementation.

We briefly discuss several decentralized AI applications, highlighting learning from experience as both a solution framework and an application of decentralized AI.

Decentralized AI training

Large models have become the norm. This makes it hard for ordinary people with ordinary resources to benefit from AI progress as much as resource-rich individuals and companies. This is an equality issue, and it calls for change.

A decentralized, collaborative approach appears promising: each type of resource, namely AI talent, compute, and storage, can contribute, collaborate, and share in the benefits of the contributions.
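As one illustration of how several parties could train a shared model without pooling their data, here is a minimal sketch of federated averaging (FedAvg). FedAvg is a known technique swapped in for illustration, not the specific approach this article proposes. Each participant runs gradient descent on its own private data, and only the resulting parameters are averaged, weighted by local dataset size. The one-variable linear model, the learning rate, the toy data, and the helper names (`local_step`, `fed_avg`, `train`) are hypothetical; the blockchain and incentive layers are left out.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs held privately by one participant


def local_step(w: float, b: float, data: Dataset,
               lr: float = 0.05, epochs: int = 20) -> Tuple[float, float]:
    """Gradient descent on mean squared error for y ~ w*x + b, using only local data."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def fed_avg(updates: List[Tuple[float, float]], sizes: List[int]) -> Tuple[float, float]:
    """Average the participants' parameters, weighted by local dataset size."""
    total = sum(sizes)
    w = sum(u[0] * s for u, s in zip(updates, sizes)) / total
    b = sum(u[1] * s for u, s in zip(updates, sizes)) / total
    return w, b


def train(participants: List[Dataset], rounds: int = 30) -> Tuple[float, float]:
    w, b = 0.0, 0.0  # shared global model
    for _ in range(rounds):
        # Each participant starts from the global model and trains locally;
        # only parameters, never raw data, are sent for averaging.
        updates = [local_step(w, b, data) for data in participants]
        w, b = fed_avg(updates, [len(d) for d in participants])
    return w, b


if __name__ == "__main__":
    # Hypothetical participants whose private data all follow y = 2x + 1.
    alice = [(0.0, 1.0), (1.0, 3.0)]
    bob = [(2.0, 5.0), (3.0, 7.0), (-1.0, -1.0)]
    w, b = train([alice, bob])
    print(round(w, 2), round(b, 2))  # expected to approach w = 2, b = 1
```

Only parameters cross the network, so raw data stays with its owner; how compute, storage, and talent would be rewarded for their contributions is not modeled here.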

Data as an asset

AI, in particular large language models (LLMs), has made significant progress. However, the creators of content on the Internet have not benefited from their contributions to this progress: their efforts have been turned into training data for AI models and LLMs, while the owners of the models are the beneficiaries. This raises fairness issues and calls for change.

A decentralized approach that treats data as an asset appears promising. One example is learning, e.g., with Duolingo. Usually a learner pays to learn, either with money or by watching ads. However, while learning, the learner interacts with the system and thereby generates data, some of which becomes training data for improving the system. If data is treated as an asset, the learner can earn credits or tokens for this data while paying for learning.
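A minimal sketch of the accounting this implies, under loudly hypothetical assumptions: a fixed fee per learning session, a fixed credit for each interaction that is accepted as training data, and credits applied against the fee with any surplus carried over. All names and numbers (`Learner`, `FEE_PER_SESSION_CENTS`, `CREDIT_PER_ACCEPTED_CENTS`) are illustrative, not Duolingo's pricing or any existing token scheme, and a decentralized system would keep this ledger on a blockchain rather than in memory.

```python
from dataclasses import dataclass

# Hypothetical parameters in integer cents, chosen only for illustration.
FEE_PER_SESSION_CENTS = 100    # price of one learning session
CREDIT_PER_ACCEPTED_CENTS = 2  # credit for each interaction kept as training data


@dataclass
class Learner:
    name: str
    credits_cents: int = 0  # earned but not yet spent
    paid_cents: int = 0     # total actually paid

    def learn_session(self, interactions: int, accepted: int) -> int:
        """Run one session and return what the learner pays for it, in cents.

        Interactions accepted as training data earn credits, which are
        applied to the session fee; any surplus carries over.
        """
        if not 0 <= accepted <= interactions:
            raise ValueError("accepted must be between 0 and interactions")
        self.credits_cents += accepted * CREDIT_PER_ACCEPTED_CENTS
        offset = min(self.credits_cents, FEE_PER_SESSION_CENTS)
        self.credits_cents -= offset
        charge = FEE_PER_SESSION_CENTS - offset
        self.paid_cents += charge
        return charge


if __name__ == "__main__":
    ana = Learner("ana")  # hypothetical learner
    print(ana.learn_session(interactions=50, accepted=30))   # pays 40 of 100
    print(ana.learn_session(interactions=100, accepted=80))  # pays 0, keeps 60 in credit
```

Integer cents avoid floating-point rounding in the ledger. Deciding which interactions count as "accepted", and auditing that decision, is where blockchain and incentive mechanisms would come in; neither is modeled here.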

Decentralized broker

Brokers are critical in everyday life, e.g., for food, accommodation, transportation, and e-commerce. However, brokers often take too large a share; e.g., Uber's cut of a fare may come close to what the driver earns. This is unreasonable and calls for change. A decentralized broker for ride sharing may increase drivers' incomes and decrease passengers' payments at the same time.
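Why both sides can gain at once is a matter of simple arithmetic: if the broker's take shrinks, the freed margin can fund both a lower fare and a higher driver payout. The sketch below uses hypothetical numbers only (a 30.00 fare, a 45% centralized take, a 15% passenger discount, and a 10% protocol fee); these are not Uber's actual rates, and the names `Trip`, `centralized`, and `decentralized` are illustrative.

```python
from typing import NamedTuple


class Trip(NamedTuple):
    fare: int    # what the passenger pays, in cents
    fee: int     # what the broker or protocol keeps, in cents
    driver: int  # what the driver receives, in cents


def centralized(fare: int, take_pct: int) -> Trip:
    """A centralized broker keeps take_pct percent of the fare."""
    fee = fare * take_pct // 100
    return Trip(fare, fee, fare - fee)


def decentralized(old_fare: int, discount_pct: int, fee_pct: int) -> Trip:
    """The passenger gets a discount; a smaller protocol fee comes out of the reduced fare."""
    fare = old_fare - old_fare * discount_pct // 100
    fee = fare * fee_pct // 100
    return Trip(fare, fee, fare - fee)


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only.
    before = centralized(3000, take_pct=45)
    after = decentralized(3000, discount_pct=15, fee_pct=10)
    print(before)  # Trip(fare=3000, fee=1350, driver=1650)
    print(after)   # Trip(fare=2550, fee=255, driver=2295)
```

Both sides gain only when (1 - discount)(1 - protocol fee) exceeds (1 - centralized take), i.e., when the centralized take is large enough to fund both the discount and the new fee.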

Decentralized education

Most of education, including learning, teaching, tutoring, research, and conferences, is still conducted in the traditional, face-to-face way. This causes accessibility and equality issues. In-person social interactions are essential for humans; however, an online approach suffices for the technical part. This calls for change. A decentralized approach is desirable, leveraging progress in IT, in particular the Internet and mobile devices, and progress in blockchain, in particular tokenomics. To account for the social aspects, a hybrid approach with a physical component is necessary.

Learning from experience

David Silver (a key contributor to AlphaGo) and his former PhD advisor Richard Sutton (a 2024 Turing Award laureate) published a paper highlighting that experience gained by interacting with the world is critical for further progress in AI, including LLMs, and that reinforcement learning is a natural framework for learning from experience.

David Silver and Richard Sutton (2025), Welcome to the Era of Experience (goo.gle/3EiRKIH). See also a discussion with Rich Sutton and others, moderated by Ajay Agrawal: https://www.youtube.com/watch?v=dhfJfQ5NueM.

This will be a big paradigm shift.

Current LLMs are not enough. Consider swimming: language and videos are not enough; we have to get into the water and interact with it, gaining experience, to learn how to swim and to enjoy it.

The last wave of AI was mainly about supervised learning, including the self-supervised learning underlying LLMs, with datasets of pairs (x, y), where y is a label. Learning from experience will be critical for the new wave of AI, with datasets of transitions (state, action, reward, next state). How to collect experience data and how to design efficient learning algorithms, especially with limited experience data, will be the next frontier questions for research and business. Learning with Duolingo, as discussed above, is an example, and treating data as an asset may help with collecting experience.
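To make the contrast between the two dataset shapes concrete, the sketch below defines both and learns from experience tuples with tabular Q-learning, chosen here only as a simple, well-known reinforcement learning algorithm rather than as the method the cited paper proposes. The five-state chain environment, its reward, and all hyperparameters are hypothetical. Experience is collected once into a small fixed buffer and replayed, which mirrors the limited-data setting described above.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

# Supervised learning: pairs (x, y), where y is a label.
SupervisedExample = Tuple[str, str]
labeled: List[SupervisedExample] = [("bonjour", "hello"), ("merci", "thank you")]

# Learning from experience: transitions (state, action, reward, next_state).
Transition = Tuple[int, int, float, int]

N_STATES = 5      # hypothetical chain: states 0..4, state 4 is the goal
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state: int, action: int) -> Tuple[float, int]:
    """Toy environment: reward 1.0 only when the goal state is reached."""
    nxt = max(0, state - 1) if action == 0 else min(N_STATES - 1, state + 1)
    return (1.0 if nxt == N_STATES - 1 else 0.0), nxt


def collect(episodes: int, rng: random.Random) -> List[Transition]:
    """Gather experience by acting at random; each episode starts at state 0."""
    buffer: List[Transition] = []
    for _ in range(episodes):
        s = 0
        while s != N_STATES - 1:
            a = rng.choice(ACTIONS)
            r, s2 = step(s, a)
            buffer.append((s, a, r, s2))
            s = s2
    return buffer


def q_learning(buffer: List[Transition], alpha: float = 0.5,
               gamma: float = 0.9, sweeps: int = 200) -> Dict[Tuple[int, int], float]:
    """Off-policy Q-learning that replays the fixed buffer several times."""
    q: Dict[Tuple[int, int], float] = defaultdict(float)
    for _ in range(sweeps):
        for s, a, r, s2 in buffer:
            # The goal state is terminal, so it has no future value.
            future = 0.0 if s2 == N_STATES - 1 else max(q[(s2, b)] for b in ACTIONS)
            q[(s, a)] += alpha * (r + gamma * future - q[(s, a)])
    return q


if __name__ == "__main__":
    q = q_learning(collect(episodes=20, rng=random.Random(0)))
    policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
    print(policy)  # greedy action per non-goal state; 1 means move right
```

Because Q-learning is off-policy, experience gathered by random behavior is enough to learn the greedy policy of moving right toward the goal.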

Decentralized AI, empowered by learning from experience, together with deep learning, reinforcement learning, blockchain, incentive mechanisms, human behavior, simulation, interpretability, and software engineering, is expected to achieve the goal: AI for all, by all, of all.

See also NARS (Non-Axiomatic Reasoning System): https://cis.temple.edu/~pwang/NARS-Intro.html