Will synthetic data help?

The short answer: it depends.

In the following, we discuss 1) ground-truth-in-the-loop, 2) the simulation-to-reality gap, 3) AlphaZero vs ChatGPT, i.e., lessons from AlphaZero for large/language models (LMs), and 4) AI∞, AIx, and AIZero, a taxonomy of AI scenarios.

Ground-truth-in-the-loop

A successful system should be built on ground truth, although it may start with a learned, approximate model or simulator.

For an AI system, this includes trustworthy training data and evaluation feedback and, when planning is involved, a reliable world model. For a system with human users, human data and feedback are paramount, and human-in-the-loop is relevant and may even be a must.

Prominent AI systems like search engines and large language models are built on valuable data from the Internet and from user feedback. The AlphaGo series and game AI have made remarkable achievements, where a perfect game rule, i.e., a model, is a core factor: it can generate high-quality or even perfect data, including game scores.

We should not deploy an AI system trained purely with an imperfect simulator, especially in high-stakes domains like healthcare, robotics, and autonomous vehicles. We should evaluate a system against ground truth to obtain dependable performance results. An imperfect system should not evaluate itself, just as a student should not grade their own assignment.
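To make the self-grading point concrete, here is a toy sketch. The function `imperfect_model` is a hypothetical stand-in for a learned system with a systematic error: graded against its own outputs it looks perfect, while grading against a ground-truth answer key exposes the error.

```python
def imperfect_model(n):
    """Hypothetical learned model of n**2 with a systematic bug on multiples of 5."""
    return n * n + (1 if n % 5 == 0 else 0)

def ground_truth(n):
    return n * n

def accuracy(predict, answer_key, inputs):
    """Fraction of inputs where the prediction matches the answer key."""
    inputs = list(inputs)
    return sum(predict(n) == answer_key(n) for n in inputs) / len(inputs)

inputs = range(1, 21)
self_graded = accuracy(imperfect_model, imperfect_model, inputs)  # the system grades itself
truth_graded = accuracy(imperfect_model, ground_truth, inputs)    # ground truth in the loop
print(self_graded, truth_graded)  # 1.0 0.8
```

Self-evaluation reports a perfect score because the grader shares the model's blind spot; only the independent key reveals that 4 of the 20 answers are wrong.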

Bridging the Simulation-to-Reality Gap

In applications with physical systems like robotics and autonomous driving, where it is much easier to train an agent in simulation than in reality, the simulation-to-reality gap (also called sim-to-real, sim2real, or the reality gap) has attracted much attention recently.
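A toy sketch of the gap, assuming an idealized projectile (45-degree launch over flat ground, no drag, so the range is v²/g) and a hypothetical simulator whose gravity constant is slightly wrong. The launch speed planned in simulation hits the target there but misses in "reality"; in this one-parameter toy, a single real trial, i.e., ground truth in the loop, is enough to re-estimate g and re-plan.

```python
import math

def speed_for_target(distance, g):
    """Launch speed that hits `distance` at 45 degrees, no drag: range = v**2 / g."""
    return math.sqrt(distance * g)

def landing_distance(v, g):
    return v * v / g

G_REAL = 9.81  # gravity in "reality" (m/s^2)
G_SIM = 9.0    # hypothetical miscalibrated simulator
TARGET = 10.0  # metres

# Plan purely in simulation.
v_sim = speed_for_target(TARGET, G_SIM)
sim_error = abs(landing_distance(v_sim, G_SIM) - TARGET)    # about 0 in simulation
real_error = abs(landing_distance(v_sim, G_REAL) - TARGET)  # about 0.83 m in reality

# One real trial puts ground truth in the loop: re-estimate g, then re-plan.
observed = landing_distance(v_sim, G_REAL)
g_est = v_sim * v_sim / observed
v_cal = speed_for_target(TARGET, g_est)
cal_error = abs(landing_distance(v_cal, G_REAL) - TARGET)
print(f"sim {sim_error:.3f} m, real {real_error:.3f} m, calibrated {cal_error:.3f} m")
```

Real systems have many unmodeled effects, so calibration is rarely this easy; the toy only shows that the simulator's own score says nothing about the gap, while real feedback does.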

A general-purpose LM system approximates the underlying world model, so there is a gap between the learned model and the real one. We dub this the “LM-to-real gap”, LM2real gap, or LM-to-reality gap. Here LMs stand for both Language Models and Large Models, which also include foundation models.

Some LM applications, like a writing aid, may tolerate more errors; others may be high-stakes and/or involve physical systems, e.g., healthcare with Med-PaLM 2 and robotics with SayCan. Our goal is to bridge this gap, which is both critical and challenging.

AlphaZero vs ChatGPT

Next we discuss whether large language models (LLMs) may borrow ideas from AlphaZero, which set a landmark in AI by tackling a very hard problem that many researchers had pursued for decades.

The lessons from AlphaZero are as follows. 1) With a game rule, there is a perfect model, which can generate infinite high-quality data, especially reliable feedback. 2) This supports iterative improvement of the policy through trial and error, using generalized policy iteration via self-play, to achieve a strong computer program. 3) Imitation learning is not enough: AlphaGo and earlier studies used expert games for training, whereas self-play RL achieves superhuman performance in Go, chess, and shogi from scratch, without human knowledge, and also in many other games.
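A toy sketch of lessons 1) and 2), assuming a hypothetical subtraction game in place of Go: players alternately take 1 to 3 stones, and whoever takes the last stone wins. The game rule is the perfect model, so evaluating the current policy by self-play is exact, and alternating that evaluation with greedy improvement is policy iteration. This is not AlphaZero's algorithm, which combines Monte Carlo tree search with neural networks; it only illustrates how a perfect model supports iterative policy improvement from scratch, with no human games.

```python
MAX_TAKE = 3  # assumed rule: remove 1..3 stones; taking the last stone wins

def legal_moves(n):
    return range(1, min(MAX_TAKE, n) + 1)

def evaluate(policy, n_max):
    """Exact self-play evaluation: both players follow `policy`.

    value[n] is +1 if the player to move with n stones wins, else -1.
    The game rule is a perfect model, so these values are exact feedback.
    """
    value = [-1] * (n_max + 1)  # n = 0: the opponent just took the last stone
    for n in range(1, n_max + 1):
        value[n] = -value[n - policy[n]]  # zero-sum: my value is minus the opponent's
    return value

def improve(value, n_max):
    """Greedy policy improvement; ties go to the smallest move."""
    policy = [0] * (n_max + 1)
    for n in range(1, n_max + 1):
        policy[n] = max(legal_moves(n), key=lambda a: -value[n - a])
    return policy

def policy_iteration(n_max):
    policy = [0] + [1] * n_max  # naive start: always take one stone
    while True:
        value = evaluate(policy, n_max)
        new_policy = improve(value, n_max)
        if new_policy == policy:  # stable policy: no further improvement
            return policy, value
        policy = new_policy

policy, value = policy_iteration(12)
print(policy[1:])  # [1, 2, 3, 1, 1, 2, 3, 1, 1, 2, 3, 1]
```

Starting from the naive policy, the loop converges to the known optimal strategy of leaving the opponent a multiple of 4 stones; at multiples of 4 every move loses, so the tie-break simply takes one stone.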

Moreover, Levine illustrates that RL can stitch together parts of policies to attain a better policy: in a tech support application, RL can learn from several specialists, each handling different aspects, to do the job better.

For LLMs, most problems have neither a perfect rule nor perfect feedback. Games and code generation are exceptions to some extent, with reliable feedback from a game engine and a code interpreter, respectively. The approach in ChatGPT can be viewed as imitation learning.
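A minimal sketch of interpreter feedback for code generation, assuming the candidate programs below are hypothetical LLM outputs and that a handful of test cases defines correctness. The interpreter returns pass/fail feedback without a human grader, though the feedback is only as reliable as the tests. Note that `exec` on untrusted code is unsafe; a real system would run candidates in a sandbox.

```python
def passes_tests(source, func_name, tests):
    """Run a candidate program and check it against test cases.

    WARNING: exec() runs arbitrary code; a real system would use a sandbox.
    """
    namespace = {}
    try:
        exec(source, namespace)
        func = namespace[func_name]
        return all(func(*args) == expected for args, expected in tests)
    except Exception:  # syntax errors, missing functions, runtime errors all count as failures
        return False

# Hypothetical candidates, standing in for sampled LLM outputs.
candidates = [
    "def add(a, b):\n    return a - b\n",  # wrong logic
    "def add(a, b):\n    return a + b\n",  # correct
    "def add(a, b) return a + b\n",         # syntax error
]
tests = [((1, 2), 3), ((0, 0), 0), ((-1, 5), 4)]
feedback = [passes_tests(src, "add", tests) for src in candidates]
print(feedback)  # [False, True, False]
```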

Synthetic data from an LLM may help build a new LLM; however, the new LLM's capacity is bounded by that of the original LLM.
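A toy illustration of this bound, not a proof, under hypothetical assumptions: the ground truth is the rule `x >= 50`, an imperfect "teacher" model labels data with `x >= 60`, and a "student" learns a threshold from whatever labels it is given. Trained only on the teacher's synthetic labels, the student inherits the teacher's errors; only ground-truth labels let it do better.

```python
def fit_threshold(xs, labels):
    """Learn t for the rule `x >= t` that makes the fewest errors on `labels`."""
    def errors(t):
        return sum((x >= t) != y for x, y in zip(xs, labels))
    return min(xs, key=errors)

def accuracy(t, xs, truth):
    """Accuracy of the rule `x >= t` measured against the ground-truth rule."""
    return sum((x >= t) == truth(x) for x in xs) / len(xs)

def truth(x):    # hypothetical ground truth
    return x >= 50

def teacher(x):  # hypothetical imperfect model that generates synthetic labels
    return x >= 60

xs = list(range(100))
t_synthetic = fit_threshold(xs, [teacher(x) for x in xs])  # student on synthetic data
t_real = fit_threshold(xs, [truth(x) for x in xs])         # student on ground truth
print(accuracy(60, xs, truth), accuracy(t_synthetic, xs, truth), accuracy(t_real, xs, truth))
# 0.9 0.9 1.0
```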

The above three subsections are basically copied from Iterative improvements from feedback for language models, published online in July 2023.

AI∞, AIx, and AIZero

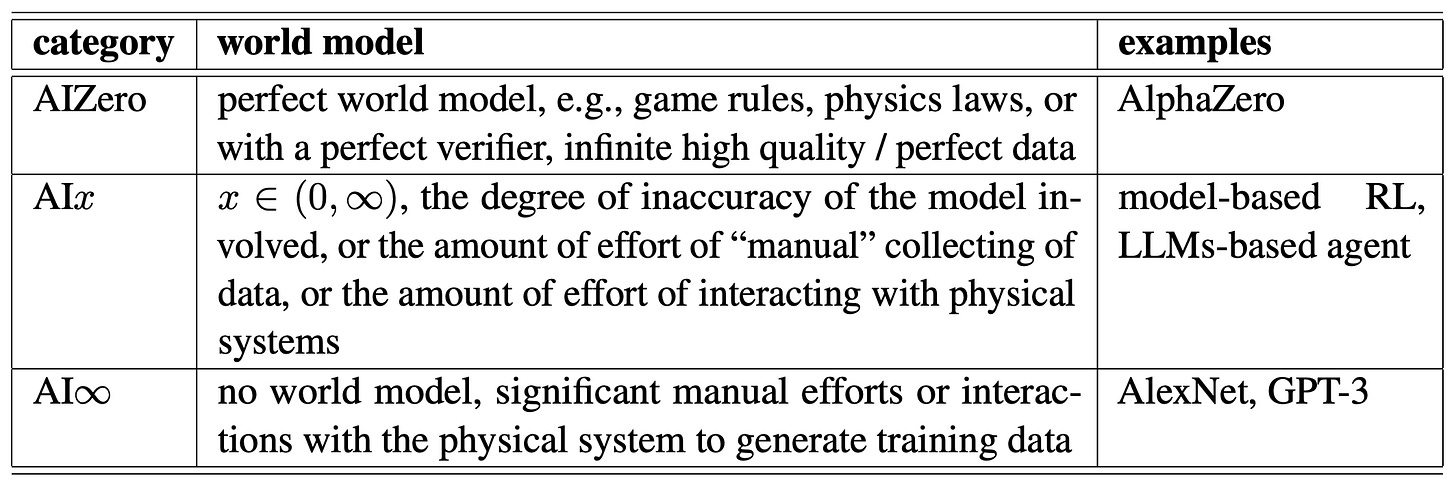

We categorize current practice in AI as follows: AI∞, AIx, and AIZero.

Many deep learning or big data methods, like AlexNet, rely on huge amounts of labeled data. Model-free RL interacts with the environment, online or offline, to collect a huge amount of training data. We classify them as AI∞, which requires significant manual effort or interaction with the physical system to generate training data.

When there is a perfect model, we can build a perfect simulator to generate training data in a digital or virtual world, which is much less costly than in the physical world. AlphaGo Zero/AlphaZero, dynamical systems governed by perfect partial differential equations, and problems with perfect verifiers are in this category. We classify them as AIZero: once the data generator is set up, it can generate data without manual effort and without the cost of interacting with the physical system.

Model-free RL for Atari games, e.g., with the Arcade Learning Environment (ALE), appears as AI∞ from the agent's perspective. However, the whole system can be considered AIZero, since we have a perfect simulator.

There are ways to improve data efficiency, e.g., simulation, digital twins, self-supervised learning, and, for RL in particular, intrinsic motivation, auxiliary signals, and model-based methods. We call such approaches AIx, where x is a number between 0 and ∞ indicating the degree of inaccuracy of the model involved, the amount of manual effort in collecting data, or the amount of effort in interacting with physical systems. Admittedly, x is only loosely defined. AIx approaches AIZero as the underlying model approaches perfection. AI∞ implies that no model is involved.

GPT-3 is in AIx. Since InstructGPT involves human feedback, it is a combination of AI∞ and AIx. AlphaGo involves human demonstration data and a perfect model/simulator, so it is a combination of AI∞ and AIZero.
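The taxonomy can be sketched as a small data structure, assuming each data source of a system is tagged with a value of x, where 0 means a perfect model and ∞ means data from people or the physical system. The source names and x values below are illustrative placeholders of ours, not measurements, since x is only loosely defined; GPT-3's source is tagged as self-supervised pretraining, one of the AIx approaches listed above.

```python
import math

def category(x):
    """Map x, a loose degree of model inaccuracy or data-collection effort, to a class."""
    if x == 0:
        return "AIZero"  # perfect model: data without manual or physical effort
    if math.isinf(x):
        return "AI∞"     # no model: data from people or the physical system
    return "AIx"         # imperfect model, somewhere in between

def classify(sources):
    """A system mixing several data sources falls into a combination of classes."""
    return {category(x) for x in sources.values()}

# Placeholder x values, for illustration only.
systems = {
    "GPT-3": {"self-supervised pretraining": 1.0},
    "InstructGPT": {"self-supervised pretraining": 1.0, "human feedback": math.inf},
    "AlphaGo": {"human expert games": math.inf, "game-rule simulator": 0},
}
for name, sources in systems.items():
    print(name, sorted(classify(sources)))
```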

The above is basically a copy from Reinforcement Learning in Practice: Opportunities and Challenges, published online in April 2022.

Summary

With a perfect model, which can generate infinite high-quality or even perfect training data, we can build a very strong or even perfect AI, like AlphaZero. This is AIZero.

Without a perfect model, as in most NLP/LLM problems, we need to collect high-quality data to achieve a competent AI. An LLM trained on synthetic data generated by another LLM has its capacity bounded by the LLM generating the data. This is AI∞ or AIx.

We should always respect the principle of ground-truth-in-the-loop. Our goal is to bridge the simulation-to-reality gap for AI, or the LM-to-real gap for LMs.